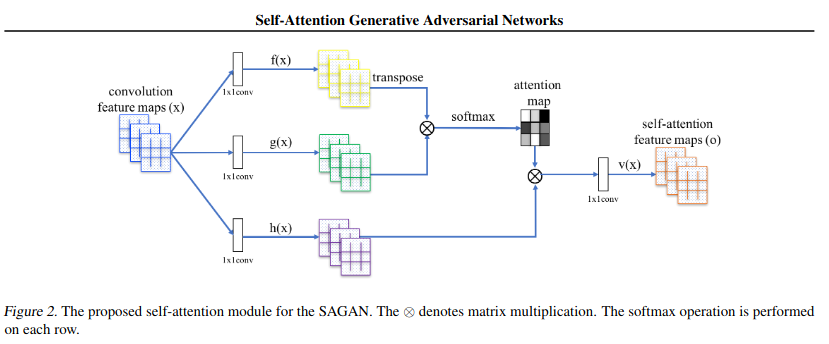

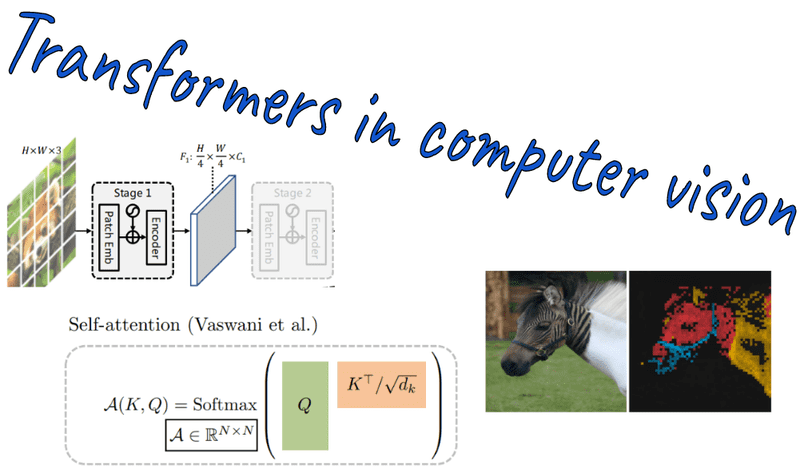

A Survey of Attention Mechanism and Using Self-Attention Model for Computer Vision | by Swati Narkhede | The Startup | Medium

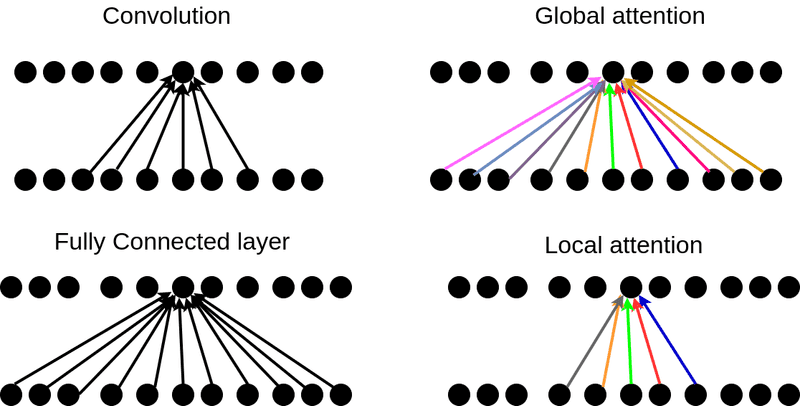

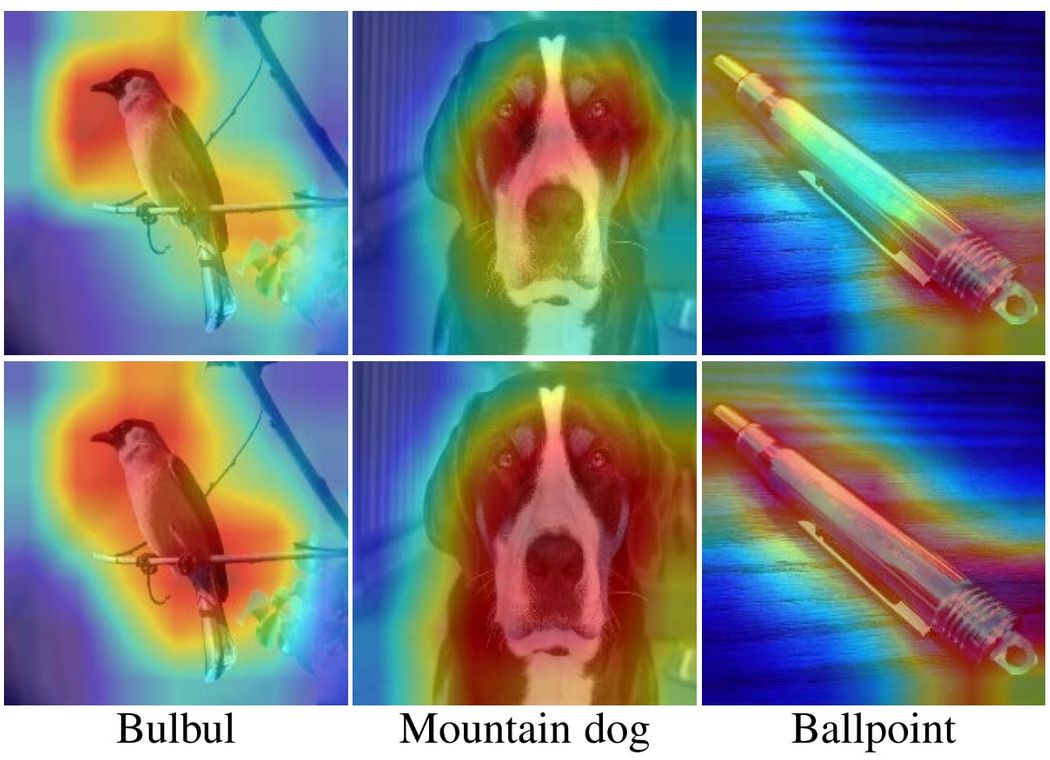

New Study Suggests Self-Attention Layers Could Replace Convolutional Layers on Vision Tasks | Synced

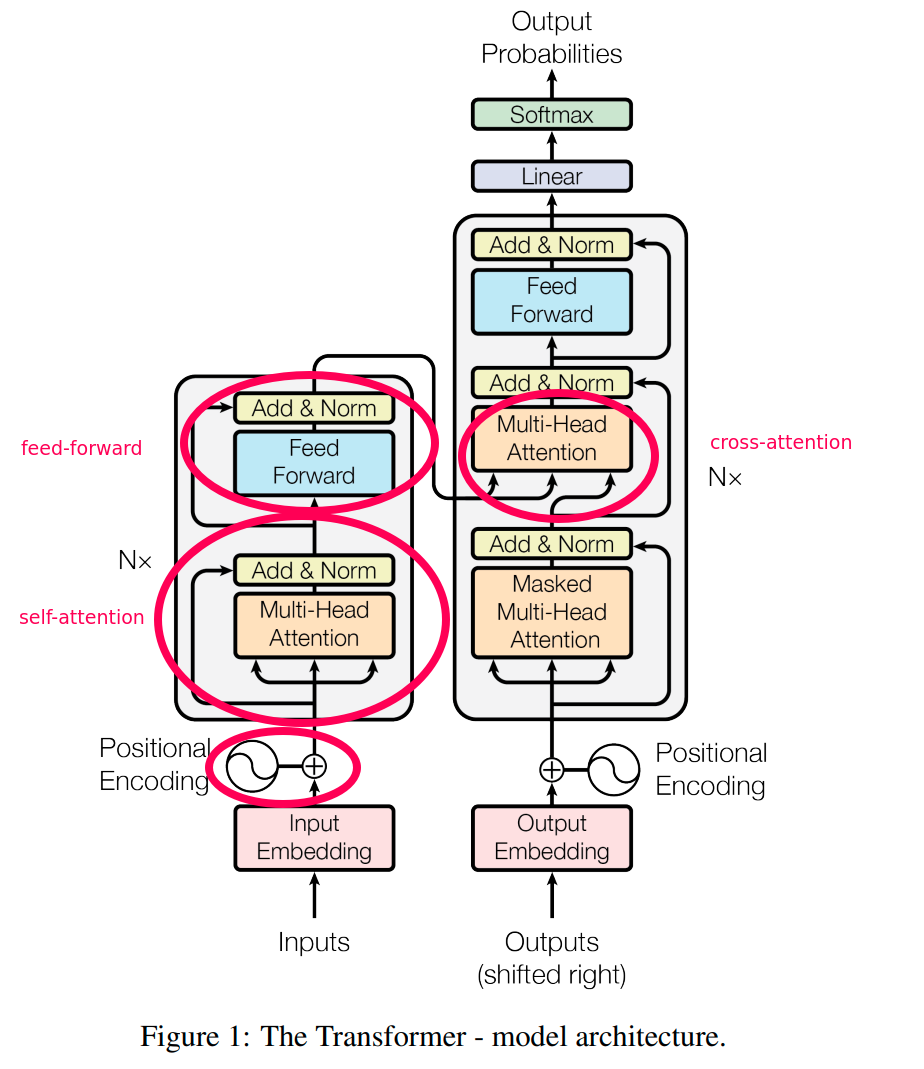

Vision Transformers: Natural Language Processing (NLP) Increases Efficiency and Model Generality | by James Montantes | Becoming Human: Artificial Intelligence Magazine

AK on Twitter: "Attention Mechanisms in Computer Vision: A Survey abs: https://t.co/ZLUe3ooPTG github: https://t.co/ciU6IAumqq https://t.co/ZMFHtnqkrF" / Twitter

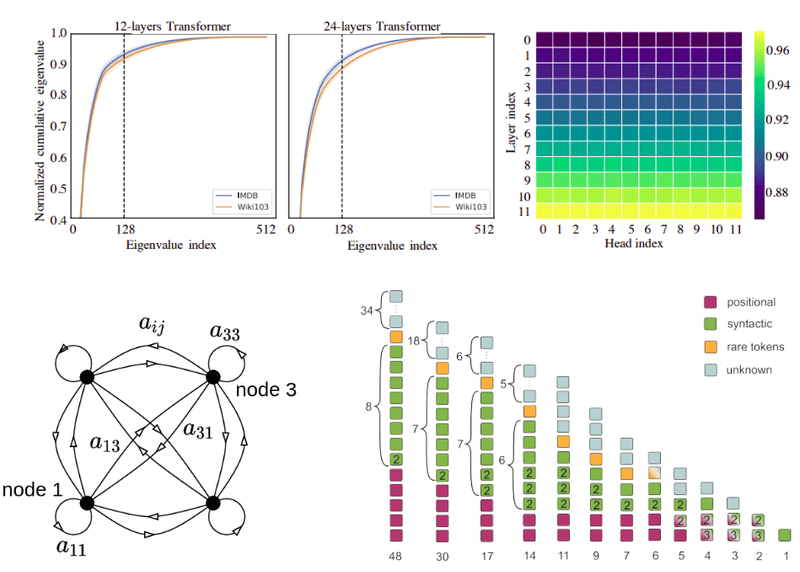

Chaitanya K. Joshi on Twitter: "Exciting paper by Martin Jaggi's team (EPFL) on Self-attention/Transformers applied to Computer Vision: "A self-attention layer can perform convolution and often learns to do so in practice."

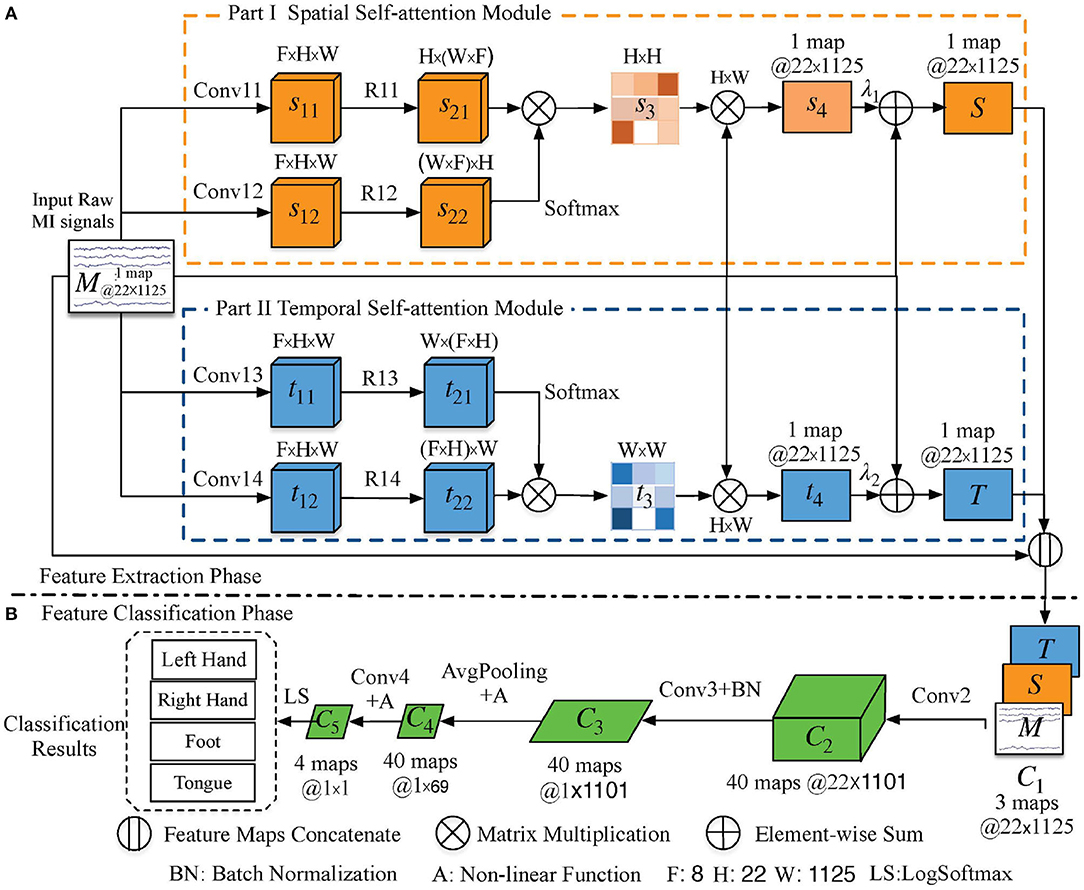

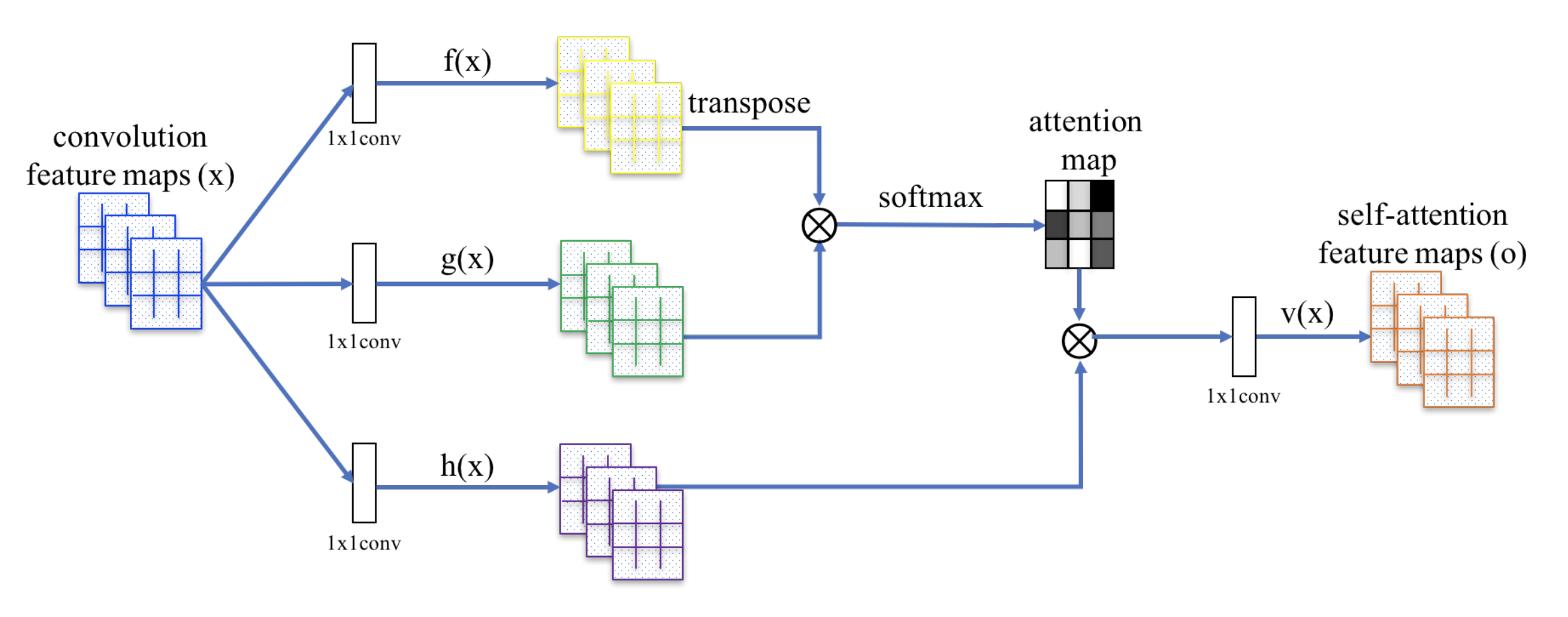

Spatial self-attention network with self-attention distillation for fine-grained image recognition - ScienceDirect