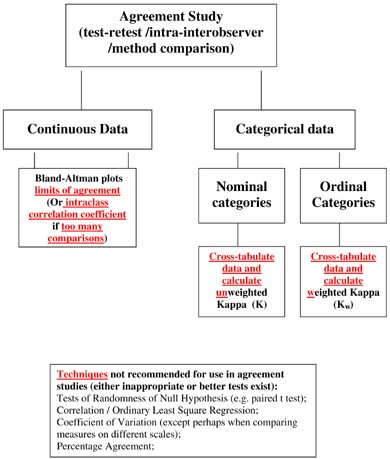

ASSESSING OBSERVER AGREEMENT WHEN DESCRIBING AND CLASSIFYING FUNCTIONING WITH THE INTERNATIONAL CLASSIFICATION OF FUNCTIONING, D

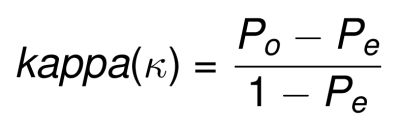

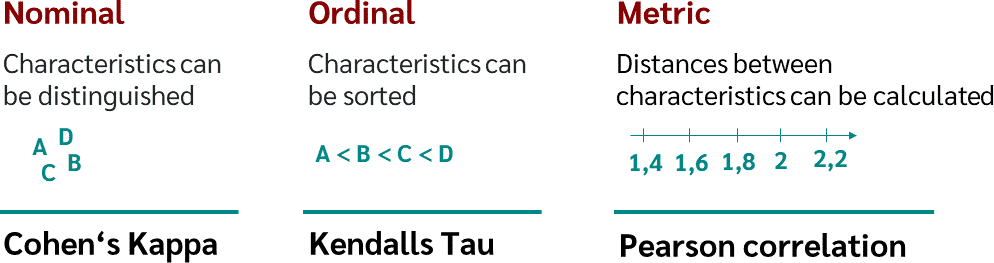

The Equivalence of Weighted Kappa and the Intraclass Correlation Coefficient as Measures of Reliability - Joseph L. Fleiss, Jacob Cohen, 1973

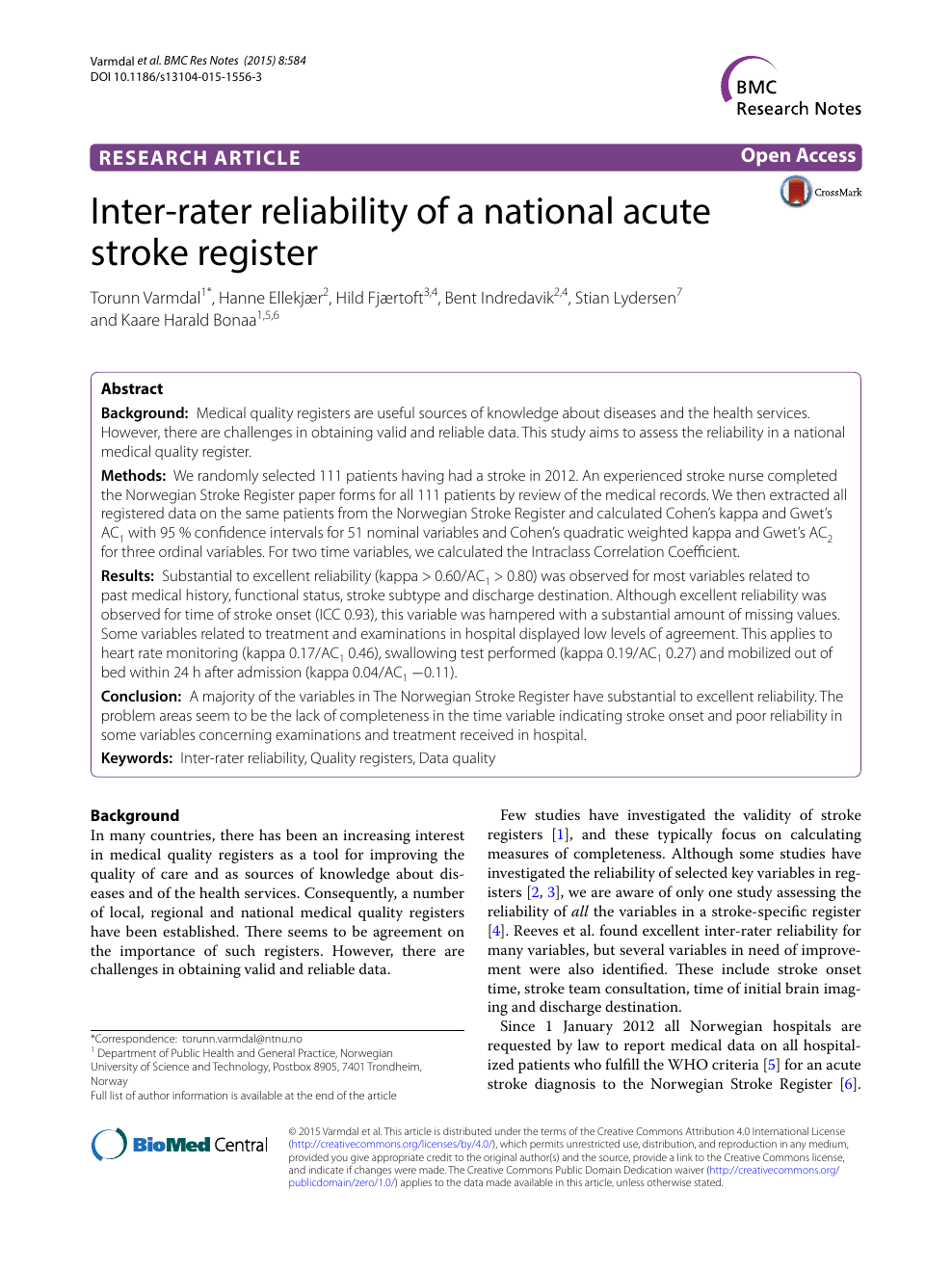

Inter-rater reliability of a national acute stroke register | CyberLeninka

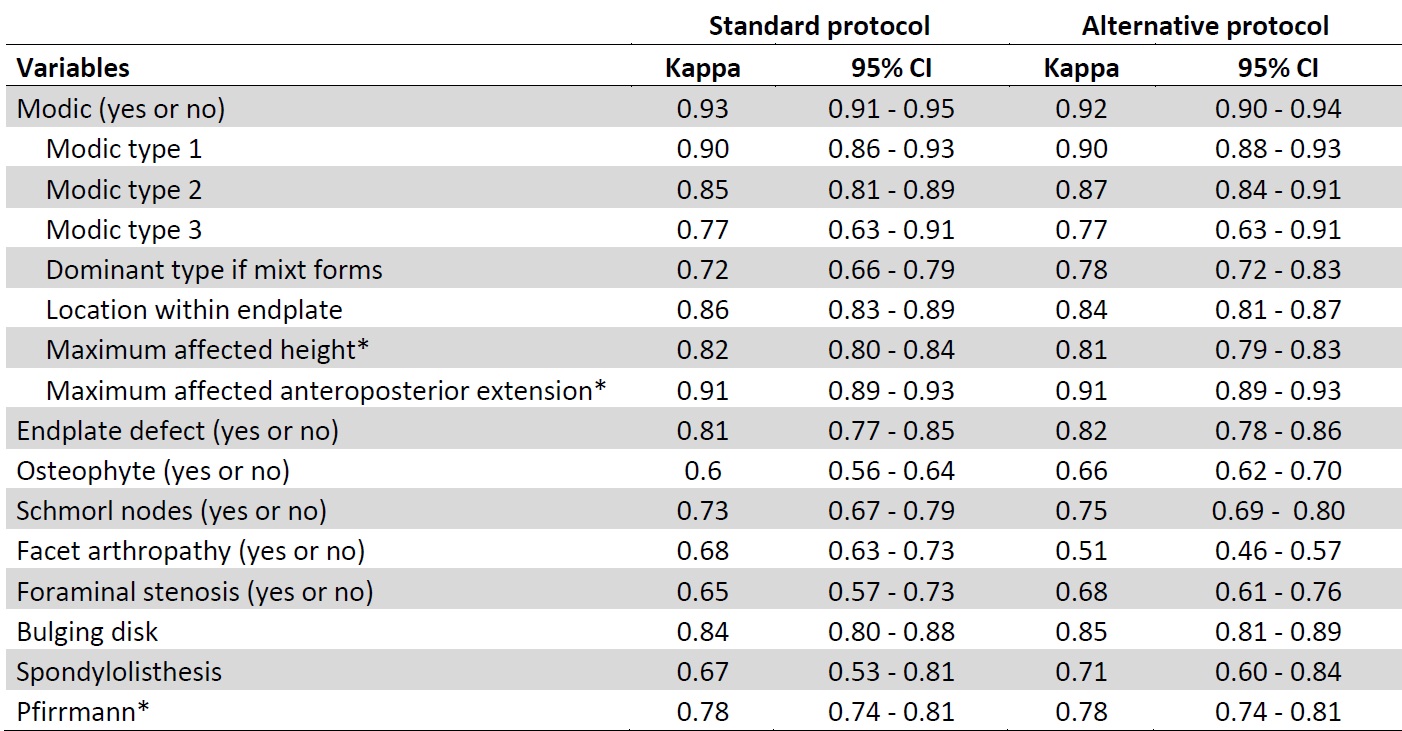

Intra and Interobserver Reliability and Agreement of Semiquantitative Vertebral Fracture Assessment on Chest Computed Tomography | PLOS ONE

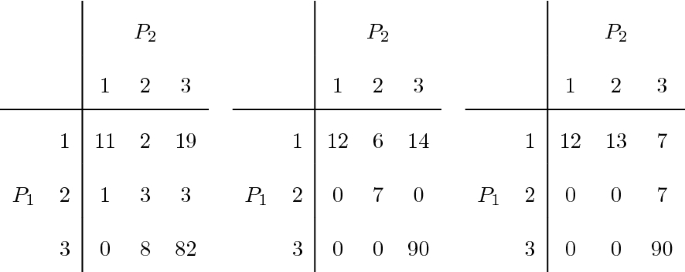

The results of the weighted Kappa statistics between pairs of observers | Scientific Diagram

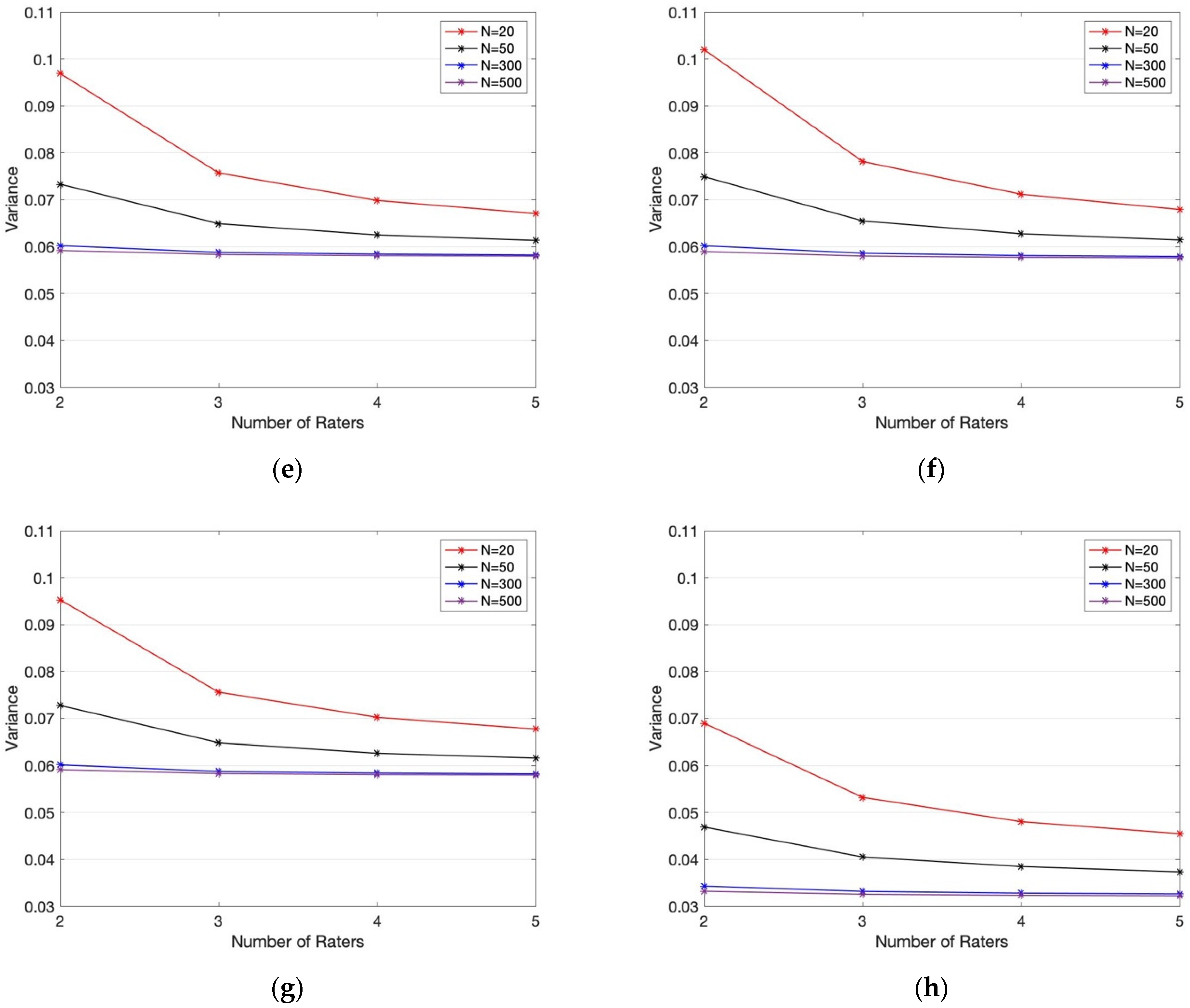

Symmetry | Free Full-Text | An Empirical Comparative Assessment of Inter-Rater Agreement of Binary Outcomes and Multiple Raters

Summary measures of agreement and association between many raters' ordinal classifications - ScienceDirect

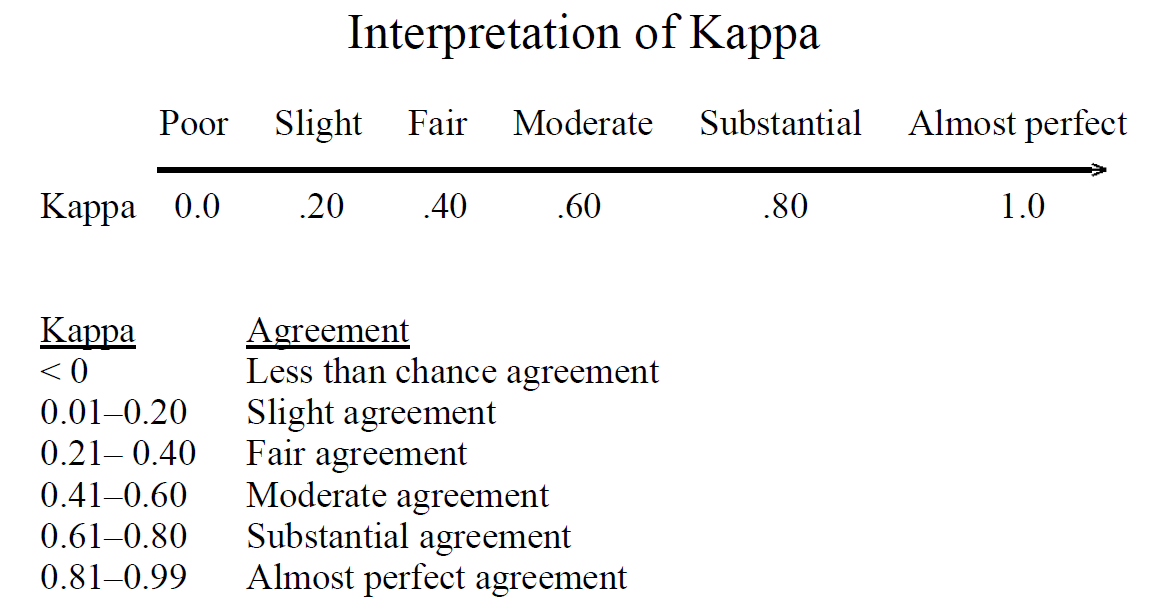

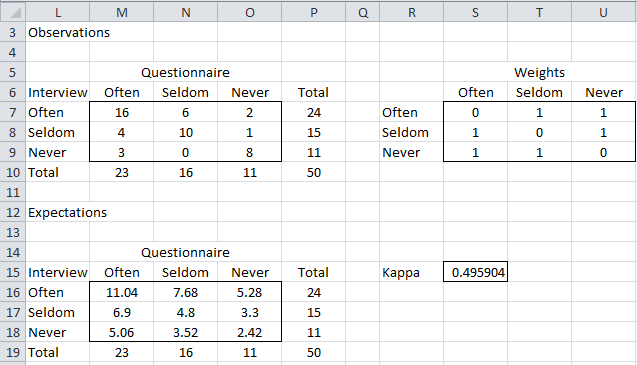

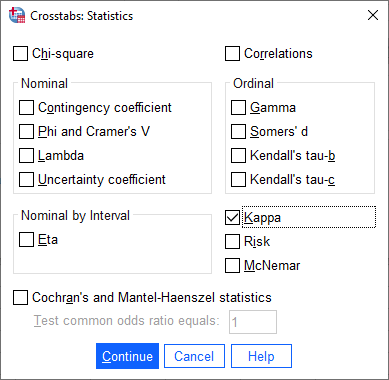

Cohen's kappa in SPSS Statistics - Procedure, output and interpretation of the output using a relevant example | Laerd Statistics